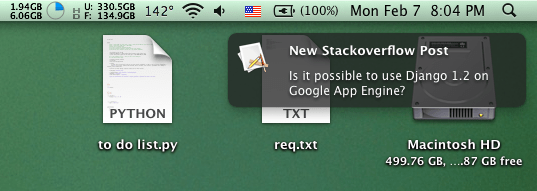

I’m a little obsessed with constantly refreshing Stack Overflow.

I couldn’t find an API for it (I’ve since found the Stack Exchange API, oops), so as an exercise I wrote a little Python script that parses the site for new questions.

I built a fetcher class that creates an SQLite database if one doesn’t exist, parses Stack Overflow for questions relating to django, and checks whether each one is new (that is, whether its URL is already stored in the database). Any new question triggers a Growl notification.

It’s extremely straightforward, but it shows you the power of Python: from idea to implementation in no time at all, including figuring out how Growl’s Python bindings work.

Simply amazing!

"""
Stack Overflow Post Checker
===========================
Parse stackoverflow HTML for questions, store in sqlite database
and send notifications of new questions.

By Yuji Tomita
2/7/2011
"""
import os
import sqlite3
import urllib2

import BeautifulSoup
import Growl


class StackOverflowFetcher:
    def __init__(self):
        self.base_url = 'http://stackoverflow.com/questions/tagged/'
        self.get_or_create_database()
        self.growl = Growl.GrowlNotifier(
            applicationName='StackOverflowChecker', notifications=['new'])
        self.growl.register()
        # (tag, sticky) pairs; sticky is passed straight to the notification.
        self.tags = [('django', True), ('python', False)]
        self.get_questions()
        self.close_connection()

    def get_questions(self):
        """
        Parse target URL for new questions.
        """
        while self.tags:
            tag, sticky = self.tags.pop()
            url = self.base_url + tag
            html = urllib2.urlopen(url).read()
            soup = BeautifulSoup.BeautifulSoup(html)
            # The script assumes each question title is a link inside an <h3>.
            for heading in soup.findAll('h3'):
                element = heading.find('a')
                if element is None:
                    continue  # heading without a link, nothing to record
                link = element.get('href')
                title = element.text
                if self.is_new_link(link):
                    self.growl.notify(
                        noteType='new',
                        title='[%s] StackOverflow Post' % tag,
                        description=title,
                        sticky=sticky)
                    self.record_question(link, title)

    def get_or_create_database(self):
        """
        Check if database file exists. Create if not.
        Open file and send query.
        If query fails, create tables.
        """
        path = os.path.join(os.path.dirname(__file__), 'questions.db')
        try:
            f = open(path)
        except IOError:
            f = open(path, 'w')
        f.close()
        self.conn = sqlite3.connect(path)
        try:
            self.conn.execute('SELECT * from questions')
        except sqlite3.OperationalError:
            self.create_database()

    def create_database(self):
        self.conn.execute(
            'CREATE TABLE questions(link VARCHAR(400), text VARCHAR(300));')

    def is_new_link(self, link):
        # "?" placeholders let sqlite3 quote the value, so links or titles
        # containing quote characters cannot break the query.
        results = self.conn.execute(
            'SELECT * FROM questions WHERE questions.link = ?;',
            (link,)).fetchall()
        return not results

    def record_question(self, link, question):
        self.conn.execute(
            'INSERT INTO questions(link, text) VALUES (?, ?);',
            (link, question))

    def close_connection(self):
        self.conn.commit()
        self.conn.close()


if __name__ == '__main__':
    stack = StackOverflowFetcher()
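A variation on the `is_new_link` / `record_question` pair above, as a minimal sketch rather than the script's actual code: it is written for Python 3 (the script itself is Python 2), and the `QuestionStore` class, its `record_if_new` method, and the in-memory database are names and choices assumed here for illustration. Making `link` a primary key lets SQLite do the "have I seen this URL?" check and the insert in a single statement.

```python
import sqlite3


class QuestionStore:
    """Hypothetical helper that remembers which question links were seen."""

    def __init__(self, path=":memory:"):
        # sqlite3.connect creates the database file if it does not exist.
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS questions ("
            "link TEXT PRIMARY KEY, text TEXT)"
        )

    def record_if_new(self, link, text):
        """Store the question; return True only if the link was not known."""
        cursor = self.conn.execute(
            "INSERT OR IGNORE INTO questions (link, text) VALUES (?, ?)",
            (link, text),
        )
        self.conn.commit()
        # rowcount is 1 when a row was inserted, 0 when the insert was ignored.
        return cursor.rowcount == 1


store = QuestionStore()
first = store.record_if_new("/questions/1/example", 'Why do "quotes" break?')
second = store.record_if_new("/questions/1/example", 'Why do "quotes" break?')
row_count = store.conn.execute("SELECT COUNT(*) FROM questions").fetchone()[0]
```

Here `first` is true and `second` is false, so a notification would only fire the first time a link is seen.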

This code is now on GitHub, where it will be kept more up to date.

https://github.com/yuchant/StackOverflowNewPostCrawler
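For anyone who would rather skip the HTML parsing, the Stack Exchange API mentioned at the top has a questions endpoint. What follows is a rough Python 3 sketch and not part of the script: the helper names `build_questions_url`, `extract_questions`, and `fetch_questions` are made up here, and the `2.3` version segment in the URL is an assumption that may need adjusting.

```python
import gzip
import html
import json
import urllib.parse
import urllib.request

API_ROOT = "https://api.stackexchange.com/2.3/questions"  # assumed API version


def build_questions_url(tag, site="stackoverflow"):
    """Build the URL for the newest questions carrying `tag`."""
    query = urllib.parse.urlencode(
        {"order": "desc", "sort": "creation", "tagged": tag, "site": site}
    )
    return API_ROOT + "?" + query


def extract_questions(payload):
    """Return (link, title) pairs from a decoded API response."""
    # Titles may contain HTML entities, so unescape them for display.
    return [
        (item["link"], html.unescape(item["title"]))
        for item in payload.get("items", [])
    ]


def fetch_questions(tag):
    """Download and decode one page of questions (needs network access)."""
    with urllib.request.urlopen(build_questions_url(tag)) as response:
        raw = response.read()
        # Decompress only when the server says the body is gzip-encoded.
        if response.headers.get("Content-Encoding") == "gzip":
            raw = gzip.decompress(raw)
    return extract_questions(json.loads(raw.decode("utf-8")))


# Offline demonstration with a hand-written payload shaped like a response.
sample = {
    "items": [
        {"link": "https://stackoverflow.com/q/1",
         "title": "Why isn&#39;t this working?"}
    ]
}
pairs = extract_questions(sample)
```

The `(link, title)` pairs can be fed into the same "is this link new?" check the scraper uses.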

I think the self.add_question in line 36 should be self.record_question?

OK; when I run this, the text column is not created, which implies the question is not recovered from the screen scrape. I changed line 30 to read

question = element.renderContents()

and that seems to work.

PS Any reason you chose “stack_overflow.db” as the name? The SQLite plugin for Firefox uses “.sqlite” as the default extension it looks for (which one can obviously change, of course…)

PS: I notice now .text is not in the BeautifulSoup docs. Hm? Where did I pick that one up?
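One way to sidestep the `.text` versus `renderContents()` question is to collect the link text by hand. This is only a sketch of a swapped-in technique, using Python 3's standard-library `html.parser` instead of BeautifulSoup; the `HeadingLinkParser` class and the sample HTML are invented for illustration and assume, as the script does, that each question is a link inside an `<h3>`.

```python
from html.parser import HTMLParser


class HeadingLinkParser(HTMLParser):
    """Collect (href, text) for every <a href=...> found inside an <h3>."""

    def __init__(self):
        super().__init__()
        self.links = []       # finished (href, text) pairs
        self._in_h3 = False   # True while inside an <h3> element
        self._href = None     # href of the <a> currently being read
        self._chunks = []     # text pieces of the current <a>

    def handle_starttag(self, tag, attrs):
        if tag == "h3":
            self._in_h3 = True
        elif tag == "a" and self._in_h3:
            self._href = dict(attrs).get("href")
            self._chunks = []

    def handle_data(self, data):
        # Text inside nested tags (e.g. <b>) still arrives here.
        if self._href is not None:
            self._chunks.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._chunks).strip()))
            self._href = None
        elif tag == "h3":
            self._in_h3 = False


sample_html = """
<h3><a href="/questions/1/first">First <b>bold</b> question</a></h3>
<h3>No link here</h3>
<p><a href="/about">Ignored: not inside an h3</a></p>
"""
parser = HeadingLinkParser()
parser.feed(sample_html)
links = parser.links
```

Because the text is gathered from every data chunk inside the link, nested markup in a title does not end up in the stored text.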

I have made a small modification to run this under Ubuntu 10.04. All the references to Growl can be removed and line 35 can be replaced with:

os.system('notify-send "%s" -t 1' % question)

And another small modification…

os.system('notify-send "Django on StackOverflow" "%s" -t 1 -i /user/local/share/icons/django.png' % question)

will create an alert with a suitable title, and a neat icon to the left of the message. (You will need to download and save your own icon in a suitable location.)
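Question titles often contain quotes, which can trip up a command assembled with `%` and passed through the shell. A hedged Python 3 sketch of the same `notify-send` call using an argument list instead; the function names `build_notify_command` and `notify` are hypothetical, and it assumes `notify-send` is on the PATH:

```python
import shutil
import subprocess


def build_notify_command(title, body, icon=None, timeout_ms=1):
    """Build the notify-send argument list; no shell quoting is involved."""
    command = ["notify-send", "-t", str(timeout_ms)]
    if icon:
        command += ["-i", icon]
    # Summary and body go last, each as its own argument.
    command += [title, body]
    return command


def notify(title, body, icon=None):
    """Send the notification if notify-send is installed; return True if sent."""
    if shutil.which("notify-send") is None:
        return False
    subprocess.run(build_notify_command(title, body, icon), check=True)
    return True


command = build_notify_command("Django on StackOverflow", 'How do "quotes" work?')
```

Calling `notify("Django on StackOverflow", question)` in place of the `os.system` line keeps the title intact whatever characters it contains.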

Ubuntu, you dawg.

Hey Derek,

Thanks for the feedback! The method name has been updated.

As for stack.db, I think I picked up the habit arbitrarily from naming my databases db_name, and it was sprint-style coding : )

“Hmm, I really hate refreshing stack.. time to write something!”

I haven’t gotten around to the Stack Exchange API yet, but I do use BeautifulSoup all the time. I swear you can work out how to parse what you need with a 3-minute Python shell session and some experimentation.

I liked how the thoughts and insights in this article are well put together and well written. Hope to see more of this soon.

I have read many posts aimed at blog lovers, but this one is a genuinely careful piece of writing. Keep it up.

I definitely agree with what you said. Your main point seemed to be the easiest thing to grasp. I do get annoyed when people worry about things they don’t know about. You hit the nail on the head and laid the whole thing out clearly, so people can take note. I will probably be back for more. Thanks

Hi! I could have sworn I’ve been to this site before but after going through some of the articles I realized it’s new to me.

Anyhow, I’m certainly delighted I came across it and I’ll be book-marking it and checking back regularly!

I really enjoy this unique blog. It is for me a secret haven where I always discover something that interests and enchants me.

Hello! This is kind of off topic, but I need some help from an established blog. Is it very difficult to set up your own blog? I’m not very technical, but I can figure things out pretty quickly. I’m thinking about setting up my own, but I’m not sure where to start. Do you have any tips or suggestions?

Many thanks

I didn’t expect to read an article like this on this site, but today’s has left me quite surprised.

OK, I don’t fully agree with what was said, but I do agree with the underlying point. Regards!

Hi,

This is probably the only time I have read your blog so far, and I have to say it is quite good. I think you will be seeing me around here often.

😉

This is a great tip, especially for those new to the blogosphere.

Simple but very accurate information… Thanks for sharing this one.

A must read post!

A fine way of describing it, and a good article for gathering material on my presentation topic, which I am going to deliver at university.

You ought to be a part of a contest for one of the most useful blogs on the internet.

I am going to recommend this site!